Quickly Building a Kafka Cluster with Docker


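I. Preparation

1. Pull the Kafka image

docker pull wurstmeister/kafka
docker tag docker.io/wurstmeister/kafka kafka
docker rmi docker.io/wurstmeister/kafka

The image is retagged as kafka, the name the compose file refers to. Because the image still carries that tag, docker rmi only removes the original docker.io/wurstmeister/kafka tag, not the image itself.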

2. Pull the image for kafka-manager, a web UI for managing Kafka

docker pull sheepkiller/kafka-manager
docker tag docker.io/sheepkiller/kafka-manager kafka-manager
docker rmi docker.io/sheepkiller/kafka-manager

3. Install the docker-compose tool

# Upgrade pip
pip3 install --upgrade pip

# Install a pinned docker-compose version
pip3 install docker-compose==1.22

# Verify the installation: if a version string is printed, it succeeded
docker-compose -v

4. Create the required directories

mkdir -p /data/docker-compose/kafka
mkdir -p /data/docker-data/kafka
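The compose file in part II mounts per-broker host directories (/data/docker-data/kafka1/data, kafka2, kafka3), which differ from the directory created above. A minimal Python sketch for pre-creating them, assuming the base path and the three-broker layout from that compose file; `broker_data_dirs` and `create_dirs` are hypothetical helper names. Docker generally creates a missing bind-mount source directory itself, so this step is optional.

```python
from pathlib import Path

# Assumption: base path and broker count taken from the compose file's volume mounts.
BASE = Path("/data/docker-data")


def broker_data_dirs(base: Path = BASE, n_brokers: int = 3) -> list[Path]:
    """Return the host-side data directory for each broker, e.g. base/kafka1/data."""
    return [base / f"kafka{i}" / "data" for i in range(1, n_brokers + 1)]


def create_dirs(base: Path = BASE, n_brokers: int = 3) -> list[Path]:
    """Create each directory like `mkdir -p` (no error if it exists) and return them."""
    dirs = broker_data_dirs(base, n_brokers)
    for d in dirs:
        d.mkdir(parents=True, exist_ok=True)
    return dirs
```

Run `create_dirs()` as a user allowed to write under /data.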

5. Create a network so that Kafka and ZooKeeper share one network segment

docker network create --driver bridge zookeeper_kafka_net

6. Build the ZooKeeper cluster

A Kafka cluster depends on a ZooKeeper cluster, so the ZooKeeper cluster has to be built first. See the article:

https://www.jinpeng.work/?id=129

II. Orchestrating the Kafka cluster with docker-compose

1. Create docker-compose.yml

version: '3'
services:
  kafka1:
    image: kafka
    restart: always
    container_name: kafka1
    hostname: kafka1
    ports:
      - "9091:9092"
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_ADVERTISED_HOST_NAME: kafka1
      KAFKA_ADVERTISED_PORT: 9091
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.78.200:9091
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
    volumes:
      - /data/docker-data/kafka1/docker.sock:/var/run/docker.sock
      - /data/docker-data/kafka1/data:/kafka
    external_links:
      - zoo1
      - zoo2
      - zoo3
  kafka2:
    image: kafka
    restart: always
    container_name: kafka2
    hostname: kafka2
    ports:
      - "9092:9092"
    environment:
      KAFKA_BROKER_ID: 2
      KAFKA_ADVERTISED_HOST_NAME: kafka2
      KAFKA_ADVERTISED_PORT: 9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.78.200:9092
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
    volumes:
      - /data/docker-data/kafka2/docker.sock:/var/run/docker.sock
      - /data/docker-data/kafka2/data:/kafka
    external_links:
      - zoo1
      - zoo2
      - zoo3
  kafka3:
    image: kafka
    restart: always
    container_name: kafka3
    hostname: kafka3
    ports:
      - "9093:9092"
    environment:
      KAFKA_BROKER_ID: 3
      KAFKA_ADVERTISED_HOST_NAME: kafka3
      KAFKA_ADVERTISED_PORT: 9093
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.78.200:9093
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
    volumes:
      - /data/docker-data/kafka3/docker.sock:/var/run/docker.sock
      - /data/docker-data/kafka3/data:/kafka
    external_links:
      - zoo1
      - zoo2
      - zoo3
  kafka-manager:
    image: kafka-manager
    restart: always
    container_name: kafka-manager
    hostname: kafka-manager
    ports:
      - "9010:9000"
    links:
      - kafka1
      - kafka2
      - kafka3
    external_links:
      - zoo1
      - zoo2
      - zoo3
    environment:
      ZK_HOSTS: zoo1:2181,zoo2:2181,zoo3:2181

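Note: links refers to services defined in the current docker-compose file, while external_links refers to containers outside it (here, the ZooKeeper containers from the separately built ZooKeeper cluster).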
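The three broker services in this compose file differ only in broker ID, host port, and advertised listener. As a rough sketch of that pattern, the Python below generates the kafkaN entries as a dictionary. It assumes the host IP 192.168.78.200, the ZooKeeper addresses, and the "host port = 9090 + broker ID" scheme used above, and it leaves out the kafka-manager service; `broker_service` and `compose` are hypothetical helpers, not part of docker-compose.

```python
import json

# Assumption: values copied from the compose file above; adjust for your environment.
HOST_IP = "192.168.78.200"
ZK_CONNECT = "zoo1:2181,zoo2:2181,zoo3:2181"
CONTAINER_PORT = 9092  # port the broker listens on inside its container


def broker_service(broker_id: int) -> dict:
    """Build one kafkaN service entry with the same keys as the compose file."""
    name = f"kafka{broker_id}"
    host_port = 9090 + broker_id  # kafka1 -> 9091, kafka2 -> 9092, kafka3 -> 9093
    return {
        "image": "kafka",
        "restart": "always",
        "container_name": name,
        "hostname": name,
        "ports": [f"{host_port}:{CONTAINER_PORT}"],
        "environment": {
            "KAFKA_BROKER_ID": broker_id,
            "KAFKA_ADVERTISED_HOST_NAME": name,
            "KAFKA_ADVERTISED_PORT": host_port,
            "KAFKA_ADVERTISED_LISTENERS": f"PLAINTEXT://{HOST_IP}:{host_port}",
            "KAFKA_ZOOKEEPER_CONNECT": ZK_CONNECT,
        },
        "volumes": [
            f"/data/docker-data/{name}/docker.sock:/var/run/docker.sock",
            f"/data/docker-data/{name}/data:/kafka",
        ],
        "external_links": ["zoo1", "zoo2", "zoo3"],
    }


def compose(n_brokers: int = 3) -> dict:
    """Return a compose-like dict holding n_brokers broker services."""
    services = {f"kafka{i}": broker_service(i) for i in range(1, n_brokers + 1)}
    return {"version": "3", "services": services}


if __name__ == "__main__":
    # Print as JSON for inspection; the structure mirrors the YAML above.
    print(json.dumps(compose(), indent=2))
```

Comparing the printed values with the YAML makes a mismatched port or broker ID easier to spot, for example when adding a fourth broker.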

2. Build and start the services

docker-compose up -d

3. Attach all Kafka and ZooKeeper containers to one network

docker network connect zookeeper_kafka_net zoo1
docker network connect zookeeper_kafka_net zoo2
docker network connect zookeeper_kafka_net zoo3
docker network connect zookeeper_kafka_net kafka1
docker network connect zookeeper_kafka_net kafka2
docker network connect zookeeper_kafka_net kafka3
docker network connect zookeeper_kafka_net kafka-manager

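The network is attached this way, rather than declared as a shared network in the compose file, because Kafka and ZooKeeper may later be spread across six machines. When the Docker cluster is then built with Docker Swarm, networking can be handled easily without modifying the compose file.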
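The seven docker network connect calls can also be issued in a loop. A minimal Python sketch, assuming the docker CLI is on the PATH and the network and container names used in this article; `connect_commands` and `connect_all` are hypothetical helper names. A failed call, for example when a container is already attached to the network, is reported and the loop continues with the next container.

```python
import subprocess
from collections.abc import Sequence

# Assumption: network and container names as used in this article.
NETWORK = "zookeeper_kafka_net"
CONTAINERS = ("zoo1", "zoo2", "zoo3", "kafka1", "kafka2", "kafka3", "kafka-manager")


def connect_commands(network: str, containers: Sequence[str]) -> list[list[str]]:
    """Build one `docker network connect NETWORK CONTAINER` argv per container."""
    return [["docker", "network", "connect", network, name] for name in containers]


def connect_all(network: str = NETWORK, containers: Sequence[str] = CONTAINERS) -> None:
    """Run each command; report failures instead of stopping at the first one."""
    for argv in connect_commands(network, containers):
        result = subprocess.run(argv, capture_output=True, text=True)
        if result.returncode != 0:
            # For example, the container may already be attached to this network.
            print(f"{' '.join(argv)} failed: {result.stderr.strip()}")
```

Call `connect_all()` on the Docker host before restarting the services.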

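4. Restart the services

Each container has just been attached to an additional network. Without a restart, the containers keep running with their earlier network setup, and Kafka and kafka-manager cannot ping the three ZooKeeper containers.

docker-compose restart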

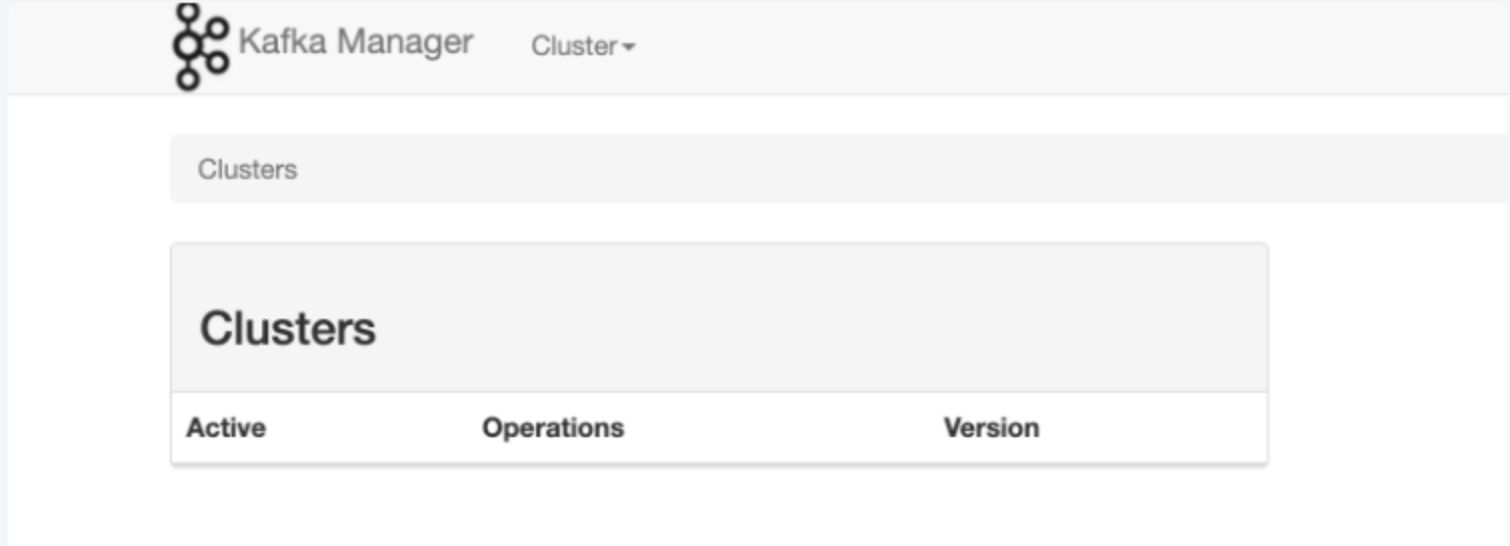

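III. Verify the result

Open the kafka-manager web UI in a browser:

http://192.168.78.200:9010

The cluster is built, and the Kafka cluster can now communicate with the ZooKeeper cluster.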
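Besides the web UI, the published ports can be probed from another machine. The sketch below assumes the host IP and port mappings from the compose file and only tests whether a TCP connection succeeds. An open port does not prove that a broker is healthy or registered in ZooKeeper, since the Docker userland proxy can accept a connection even when nothing is listening inside the container. `port_open` and `check_all` are hypothetical names.

```python
import socket

# Assumption: host IP and published ports taken from the compose file above.
HOST_IP = "192.168.78.200"
PORTS = {"kafka1": 9091, "kafka2": 9092, "kafka3": 9093, "kafka-manager": 9010}


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_all(host: str = HOST_IP, ports: dict[str, int] = PORTS) -> dict[str, bool]:
    """Probe every published port and map each service name to the result."""
    return {name: port_open(host, port) for name, port in ports.items()}
```

For example, `print(check_all())` prints a name-to-boolean map for kafka1 to kafka3 and kafka-manager.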

Article URL: https://www.jinpeng.work/?id=130

Unless otherwise stated, all articles on this site are original; please credit the original link when reposting.
